When LLMs Meet Robotics, Things Might Get Crazy

Explore how improved large language models could fuse with robotics to transform physical automation and create a new technological era that is fascinating, frightening, and possibly insane.

Insight on AI & Prompt Engineering

Examine the contentious debate around whether LLMs actually reason or just perform sophisticated pattern matching.

Compare probabilistic reasoning in LLMs with logical reasoning systems and understand when to use each approach.

Trace the evolution of semantic search from 1960s keyword matching to modern LLMs, and understand how these technologies built on each other.

Explore recent advances in AI voice generation and what they mean for content creation, accessibility, and the future of human-AI interaction.

Learn how to use structured JSON prompts for more precise image generation control and consistent results across AI art tools.

Explore whether LLM embedding spaces represent a universal language of meaning that transcends human-readable text.

Discover how LLMs transform language into mathematical representations, and why this numeric encoding is key to their understanding and generation capabilities.

Explore the relationship between LLMs and vector databases, and why they're complementary technologies rather than equivalent systems.

Understand what makes LLMs fundamentally different from previous AI breakthroughs and why they represent a paradigm shift in artificial intelligence.

Explore LLM jailbreaking techniques, why they work, and how to build more robust AI systems that resist manipulation.

Understand prompt injection attacks - how they work, why they're dangerous, and how to protect your AI applications from manipulation.

Understand ReAct prompting - a powerful technique that combines reasoning and acting to help AI solve complex problems through iterative thought-action cycles.

Compare three popular AI coding tools - Cursor (IDE), Cline (VS Code extension), and Lovable (web-based builder) - to find which fits your workflow.

Explore why product-led companies have a massive advantage in the AI-assisted development era, and how tools like Claude Code amplify their strengths.

Understand the Model Context Protocol (MCP) - Anthropic's open standard for connecting AI models to data sources and tools, enabling contextual AI applications.

Compare OpenAI's Codex CLI and Anthropic's Claude Code - two powerful command-line AI assistants with different strengths and use cases.

Learn how to use OpenAI's moderation endpoint to filter harmful content, protect users, and build safer AI applications.

Learn how to prompt AI to provide honest, critical feedback instead of just agreeing with everything you say - get real value, not empty validation.

Discover how to build and deploy real applications from your phone using Termius, DigitalOcean, and Claude Code - coding truly becomes location-independent.

Learn how to structure markdown files that enable powerful bulk operations with Claude Code, from multi-file edits to complex refactoring tasks.

Explore the difference between vibe coding (AI-assisted development) and vibe servering (AI-assisted server management), and why understanding both matters.

Discover metaprompting - using AI to write better prompts for AI. Learn how this meta-level technique can dramatically improve your prompt engineering workflow.

Master Chain of Thought prompting - the technique that dramatically improves AI reasoning by making the model show its work step by step.

Understand tokens - the fundamental units that AI models use to process text, and learn how they impact cost, performance, and prompt design.

Explore hidden prompts (system prompts) - the invisible instructions that shape AI behavior, and learn why they're essential for building reliable AI applications.

Discover how XML tags create structure, clarity, and powerful capabilities in AI prompts - from basic organization to advanced multi-step reasoning.

Learn how delimiters dramatically improve prompt clarity, help mitigate prompt injection attacks, and enhance AI response quality.

A comprehensive guide to Retrieval-Augmented Generation (RAG) - how it works, why it matters, and how to implement it effectively.

Understand the difference between zero-shot, one-shot, and few-shot learning - essential concepts for effective prompt engineering.

A comprehensive guide to crafting effective prompts that consistently produce high-quality AI responses.

An introduction to prompt engineering - the emerging discipline of designing effective instructions for AI systems.

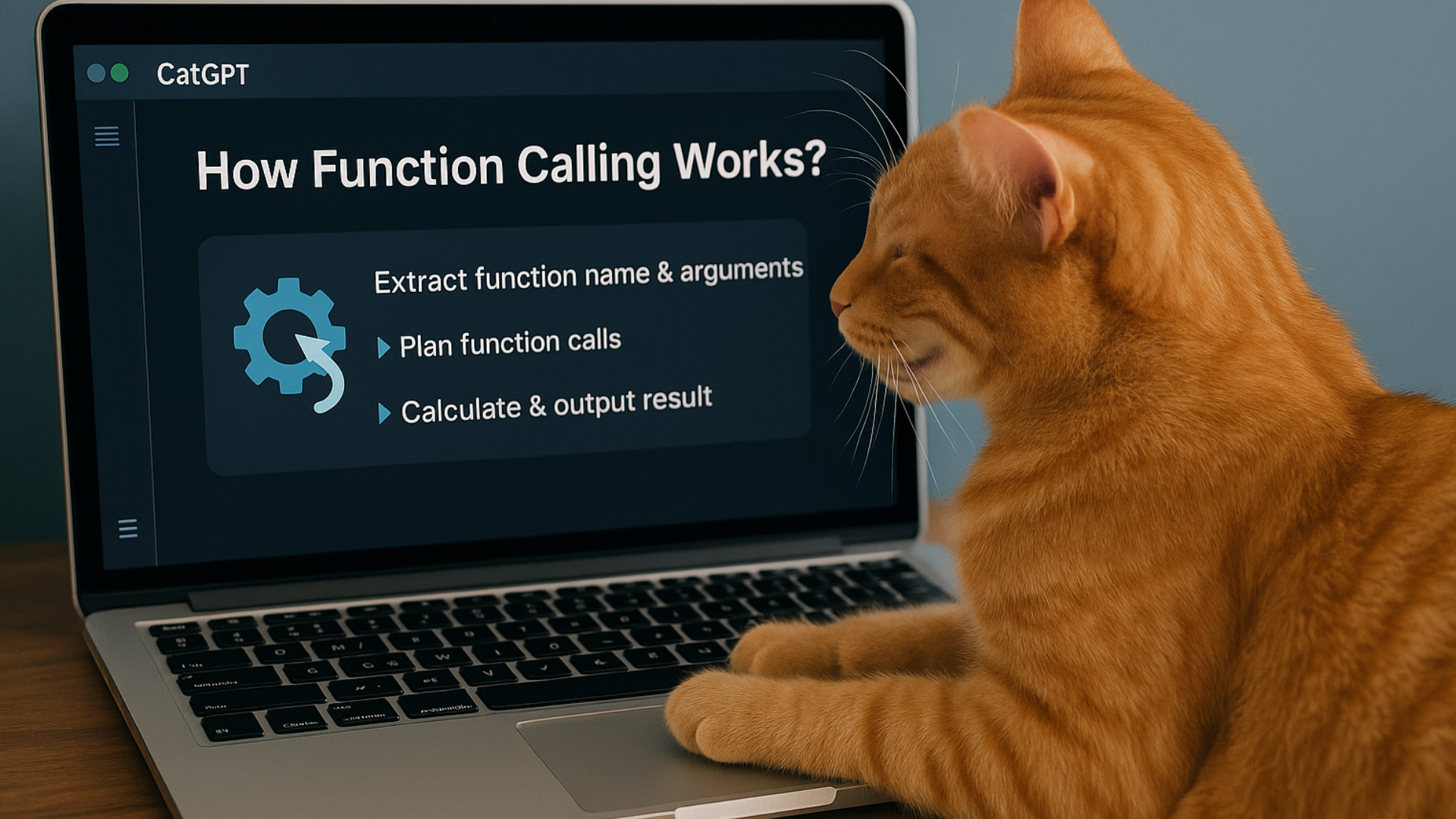

Function calling lets AI models request code execution, database queries, and API calls, which your application then carries out. Here's how it actually works and when you need it versus when simple prompting is enough.

The AI startup world is full of products that are thin wrappers around OpenAI or Anthropic APIs. That's not a problem. That's how most of the economy works.

Rate limits feel like artificial restrictions. They're not. They force you to build better systems with queuing, caching, and backpressure. Here's why unlimited access would actually be worse.

Streaming makes AI responses appear faster by showing tokens as they're generated. Here's when it actually improves UX and when it's unnecessary complexity.